In my last post, I pointed out that it makes as much sense to compare black scores in Boston and Detroit as it does to compare white scores in Vermont and West Virginia (not that people don’t do that, too), given the substantial difference in black poverty rates.

There are all sorts of actual social scientists who investigate race and poverty, and I’m not trying to reinvent the wheel. I don’t need to prove that poverty has a strong link to academic achievement. Apparently, though, some people in the education industry need to be reminded. So part 2 of my rationale for digging into the poverty rates (with the first being lord, they’re hard to find) is that I wanted to remind people that we need to look at both factors. Ultimately, it doesn’t matter if my data analysis here is correct or I screwed it up. If people start demanding to know how poverty affects outcomes controlled for race, whether my analysis is correct or not, then this project has been worthwhile. Even given the squishy data with various fudge factors, there appears to be a non-trivial relationship, as you’ll see.

But the third part of my rationale for taking this on is linked to my curiosity about the data. Would it support—or, more accurately, not conflict with—my own pet theory?

I expected that my results would show a link between poverty and test scores after controlling for race, although given the squishiness of the data I was using, the small sample size, and NAEP’s sampling (which would be by NSLP participation, not poverty), I didn’t expect it to explain all of the variance.

But I also think it likely that poverty saturation, for lack of a better word, would have an additional impact. So Detroit has lots of blacks, Fresno doesn’t. But they both have a high rate of overall poverty, and since poverty correlates both with low ability and, alas, low incentive, the classes are brutally tough to teach with all sorts of distractors. Disperse the poor kids and far more of them will shrug and pay attention, with only a few dedicated troublemakers determined to screw things up no matter what the environment.

This is hardly groundbreaking; that belief is behind the whole push for economic integration, it’s how gentrifiers are rationalizing their charter schools, and so on. I don’t agree with the fixes, and of course I don’t think that poverty saturation explains the achievement gap, but I believe the problem’s real enough to singlehandedly account for the small and functionally insignificant increase in some charter school test scores. I have more thoughts on this, but it would distract from my main purpose here, so hold on to that point. For now, I was also digging into the data for my own purposes, to see if it didn’t contradict my own idea of poverty’s impact.

Poverty Variables

I thought these rates might be related, all for the districts (not the cities):

- Percentage of enrolled black students in poverty (as a percentage of all black students)

- Percentage of enrolled black students in poverty (as a percentage of all students)

- Percentage of enrolled poor kids

- Percentage of enrolled poor black kids (as a percentage of all poor kids)

- Percentage of blacks in poverty (overall, adults and kids, from ACS)

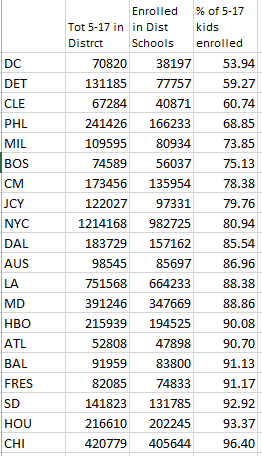

In my last post, I discussed the difficulty of assigning the correct number of poor black students to the district. Should I assume the enrolled poverty rate is the same as the district poverty rate for black and poor children, or assume that the bulk of the poor children enrolled in district schools, thus raising the poverty rate? This makes a huge difference in schools that only enroll 50-60% of the district students. I decided to assign all the poor kids to the district schools, which will overstate the poverty levels, but nowhere near as much as the reverse would distort them. So all the above poverty levels involving enrolled students assume that all poor kids enroll in district schools–that is, I used the far right row of each of the three poverty measures shown in the table below.

(Notice that in a few cases, the ACS poverty level is higher than the assigned poverty rate, which is nutty. But I’m creating the black child poverty rate by adding up children in and children not in poverty, rather than using children in poverty and total black children, to be consistent.)
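To make the two enrollment assumptions concrete, here’s a minimal sketch of how far apart they can land. The district numbers are made up for illustration, and `enrolled_poverty_rates` is a hypothetical helper name, not anything from the spreadsheet.

```python
def enrolled_poverty_rates(district_kids, district_poor, enrolled_kids):
    """Return (same_rate, all_poor_enrolled) poverty rates for one district.

    same_rate: enrolled kids are poor at the same rate as all district kids.
    all_poor_enrolled: every poor district kid attends a district school.
    """
    same_rate = district_poor / district_kids
    # Cap at 1.0 in case there are more poor kids than enrolled students.
    all_poor_enrolled = min(1.0, district_poor / enrolled_kids)
    return same_rate, all_poor_enrolled


# Hypothetical district: 100,000 school-age kids, 30,000 of them poor,
# and only 55% of the kids enrolled in district schools.
same_rate, all_poor = enrolled_poverty_rates(100_000, 30_000, 55_000)
print(f"same-rate assumption:         {same_rate:.1%}")
print(f"all-poor-enrolled assumption: {all_poor:.1%}")
```

The lower the enrollment share, the wider the gap between the two numbers, which is why the choice matters most in districts that enroll only 50-60% of their kids.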

Boston was the only school district I could find that provided data on how many district kids weren’t enrolled, what percent by race, and where they were (parochial, private, charters, homeschooled). Thanks, Boston!

How likely was it that all these kids were evenly pulled from every level of the income spectrum?

I also don’t think it’s a coincidence that the weakest schools have the greatest discrepancies in the two calculations. Particularly of interest is DC, which has a low black poverty rate, a low enrollment rate (because half the kids are in charters), and one of the lowest performers using my test metric (see below). Given that no one has established breathtakingly different academic performances between charter and public schools, it doesn’t seem likely that DC’s lower than expected performance is caused purely by crappy teaching of a mostly middle class crowd.

Plus, I’m a teacher in a public school, and like most teachers in public schools, I see charter-skimming in action. I see the top URM kids go off to charter schools from high poverty high schools, and I see the misbehavers get kicked back to the public schools. To hell with the protestations and denial, I see cherrypicking in action. And there you see emotions at play. But only after two logical arguments.

So all the bullet points except the last one use that same assumption. And I know it’s a fudge factor, but it’s the best I could do. Here’s hoping the feds will give us a better measure in the future.

Other Variables

- Percent of district kids enrolled (using ACS data and school/census enrollment numbers)

- Percent of enrolled kids who are black (from district websites)

- Percent of black students scoring basic or higher in 8th grade math

I decided to go with basic or higher because seriously, NAEP proficiency is just a stupidly high marker. This is the value I used as the dependent variable in the regressions.

Analysis and Results

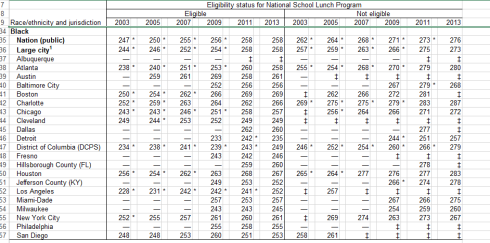

What I looked for: well, hell. I don’t do math, dammit, I teach it. I figured I’d look for the highest R squared I could find and p-values between 0 and .05. When I started, I’d have been thrilled with anything explaining over 50% of the variation, so I decided that I’d give the results if I got 40% or higher for any one variable, and over 60% for multiple regressions. I used the correlation table to give me pointers:

The red and black is just my own markings to see if I’d caught all the possibilities. Red means no value in multiple regressions, bold means there’s a strong correlation, italic and bold means it might be a good candidate for multiple regressions. As I mention below, I kind of run out of steam later, so I’m going to come back to this to see if I missed any possibilities.
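For anyone who wants to try the single-variable screen outside Excel, here’s a minimal sketch of the R squared calculation for one predictor. The district numbers are made up for illustration (they are not the TUDA data), and `r_squared` is a hypothetical helper name. It skips the p-value, which needs a t distribution and is easier to get from a stats package.

```python
def r_squared(xs, ys):
    """R squared of a one-variable least-squares fit (squared Pearson r)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy ** 2 / (sxx * syy)


# Made-up values: a poverty rate and a percent-at-basic score per district.
poverty_rate = [22, 35, 28, 41, 30, 38, 25, 33]
pct_basic = [52, 30, 44, 25, 47, 36, 50, 38]

r2 = r_squared(poverty_rate, pct_basic)
print(f"R squared: {r2:.3f}")
```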

I don’t usually do this sort of thing, and I don’t want the writing to drown in figures. So I’ll just link in the results.

| Variable | Single (R squared) | Multiple (R squared) |
| --- | --- | --- |
| % poor blacks enrolled (approx) | 0.463 | 0.607, 0.593 |
| % Poor Enrolled (Approx) | 0.398 | 0.700 |
| poor blks/Tot kids | 0.516 | 0.640 |
| % Black Enrollment (frm dist) | 0.217 | 0.612 |
| % Poor Kids in District | 0.160 | |
| % blk kids poor in dist (ACS) | 0.319 | |
| Dist Overall Blk Pov | 0.488 | |
| % of 5-17 kids enrolled | 0.216 | |
| Poor blcks/Poor | 0.161 | |

Each multiple regression pairs the row variable with one of % Poor Enrolled (Approx), poor blks/Tot kids, % Black Enrollment (frm dist), % Poor Kids in District, or Dist Overall Blk Pov. The 0.700 result pairs % Poor Enrolled (Approx) with Dist Overall Blk Pov.
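As a quick check, here’s a sketch that applies the 40% single-variable cutoff to the single-regression values in the table. The R squared values are copied from the table. Rounding to two decimals before comparing is an assumption, made so that 0.398 counts as 40%.

```python
# Single-regression R squared values copied from the results table.
single_r2 = {
    "% poor blacks enrolled (approx)": 0.463,
    "% Poor Enrolled (Approx)": 0.398,
    "poor blks/Tot kids": 0.516,
    "% Black Enrollment (frm dist)": 0.217,
    "% Poor Kids in District": 0.160,
    "% blk kids poor in dist (ACS)": 0.319,
    "Dist Overall Blk Pov": 0.488,
    "% of 5-17 kids enrolled": 0.216,
    "Poor blcks/Poor": 0.161,
}

THRESHOLD = 0.40  # the 40% cutoff for a single variable

# Assumption: round to two decimals first, so 0.398 is treated as 0.40.
keepers = {
    name: r2 for name, r2 in single_r2.items() if round(r2, 2) >= THRESHOLD
}

for name, r2 in keepers.items():
    print(f"{name}: {r2:.3f}")
```

Under that rounding assumption, four variables pass, which lines up with the four single-regression scatter plots.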

I ran some of the other multiple regressions and am pretty sure I didn’t get any other strong results, but honestly, yesterday I just ran out of steam. I have a brother showing up to help me move on Saturday, and he’ll be pissed if I’m not packed up. Normally I’d just put this off, but I’ve got two or three other “put offs” and I’m close enough to “done” on this that I want it over.

Scatter plots for the single regressions:

- Black Poverty as percent of Total Black Enrolled

- Black Poverty as percent of Total Enrolled

- Enrolled Student Poverty

- District Overall Black Poverty

Apparently you can’t do a scatter plot for multiple regressions. Here’s what I did just to see if it worked, using the winning multiple regression of Overall Black Poverty and Total Enrolled Poverty:

I calculated the predicted value for each district using the two slopes and the y-intercept. Then I graphed predicted versus actual scores on a scatter plot and added a trend line. Is it just a coincidence that the R squared of the trendline is the same as the R squared for the multiple regression? I have no idea. If this is totally wrong, I’ll kill it later, but I’m genuinely curious if this is right or wrong, or if Excel does this and I just don’t know how to tell it to graph multiple regressions.
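On the coincidence question: for an ordinary least-squares fit that includes an intercept, the squared correlation between predicted and actual values equals the regression’s R squared, so the match is expected. Here’s a minimal sketch that checks this on made-up numbers (not the TUDA data). The function names are hypothetical, and the two-predictor fit is solved by hand from the normal equations rather than by whatever Excel does internally.

```python
def mean(values):
    return sum(values) / len(values)


def fit_two_predictors(x1, x2, y):
    """Least-squares fit y = b0 + b1*x1 + b2*x2 via the normal equations."""
    m1, m2, my = mean(x1), mean(x2), mean(y)
    d1 = [a - m1 for a in x1]
    d2 = [a - m2 for a in x2]
    dy = [a - my for a in y]
    s11 = sum(a * a for a in d1)
    s22 = sum(a * a for a in d2)
    s12 = sum(a * b for a, b in zip(d1, d2))
    s1y = sum(a * b for a, b in zip(d1, dy))
    s2y = sum(a * b for a, b in zip(d2, dy))
    det = s11 * s22 - s12 * s12  # zero only if the predictors are collinear
    b1 = (s1y * s22 - s2y * s12) / det
    b2 = (s2y * s11 - s1y * s12) / det
    b0 = my - b1 * m1 - b2 * m2
    return b0, b1, b2


def regression_r2(actual, predicted):
    """R squared as 1 - (residual sum of squares / total sum of squares)."""
    my = mean(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - my) ** 2 for a in actual)
    return 1 - ss_res / ss_tot


def trendline_r2(xs, ys):
    """R squared of a straight-line fit of ys on xs (squared correlation)."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((a - mx) * (b - my) for a, b in zip(xs, ys))
    sxx = sum((a - mx) ** 2 for a in xs)
    syy = sum((b - my) ** 2 for b in ys)
    return sxy ** 2 / (sxx * syy)


# Made-up numbers for illustration only.
black_pov = [22, 35, 28, 41, 30, 38, 25, 33]
enrolled_pov = [45, 80, 60, 85, 55, 75, 50, 70]
pct_basic = [52, 30, 44, 25, 47, 36, 50, 38]

b0, b1, b2 = fit_two_predictors(black_pov, enrolled_pov, pct_basic)
predicted = [b0 + b1 * a + b2 * b for a, b in zip(black_pov, enrolled_pov)]

multiple_r2 = regression_r2(pct_basic, predicted)
scatter_r2 = trendline_r2(predicted, pct_basic)
print(f"multiple regression R squared: {multiple_r2:.6f}")
print(f"predicted-vs-actual trendline: {scatter_r2:.6f}")
```

The two printed values agree up to floating-point error. In the same setup, the trendline of actual on predicted has a slope of 1 and an intercept of 0, which is another way to sanity-check the predicted-versus-actual chart.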

Again, I’m not trying to prove anything. I believe it’s already well-established that poverty within race correlates with academic outcomes. I was just trying to collect the data to remind people who discuss NAEP scores in the vacuum of either race or poverty that both matter.

And here, I’m going to stop for now. I am deliberately leaving this open-ended. If I didn’t screw up and if I understand the stats behind this, it appears that certain black poverty and overall poverty factors explain anywhere from 40 to 60% of the variance in the NAEP TUDA scores. Overall district black poverty and total enrolled poverty combine to explain 70%. In my fuzzy, don’t-fuss-me-too-much-with-facts world view, this doesn’t contradict my poverty saturation theory. But beyond that, I want more time to mull this. I’ve already noticed some patterns I want to write more about (like my doctored black poverty number wasn’t as good as overall district black poverty, but my doctored total poverty number worked well, huh), but I’m feeling done, and I’d really like to get feedback, if anyone’s interested. I’m fine with learning that I totally screwed this up, too. Unlike the last post, where I feel pretty solid on the data collection, I’m new at this. If you want to see the very messy Google Docs file with all the data, gmail me at this blog name.

Two posts in two days is some sort of record for me–and three posts in a week to boot.

I’ll have my retrospective post tomorrow, I hope, since I’ve posted on Jan 1 every year of my blog so far. Hope everyone has a great new year.